Robot Pose Tracking in the Wild

Estimating the robot pose is a fundamental requirement for vision-based robot control, and making that estimate accurate takes considerable effort and care. Traditional approaches require modifying the robot with markers, which makes them unsuitable for most unstructured environments. Our goal is to investigate the possibilities of tracking robots in the wild, in unforeseen scenarios, in pursuit of autonomous robot-camera calibration, dynamic visual servoing, and even transfer learning. In this project, we use advanced computer vision and robot state estimation techniques to estimate the robot's pose in dynamic environments. We focus on tracking the pose of various robots, including robot manipulators, surgical robots, and snake robots, and consider how foundation models may both leverage and be leveraged by these techniques.

Students & Collaborators

- Jingpei Lu

- Shan Lin

- Florian Richter

- Lucas Liang

- Tristin Xie

Publications

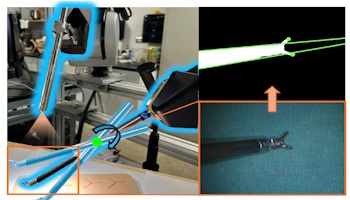

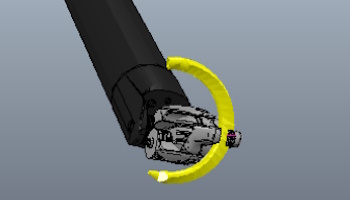

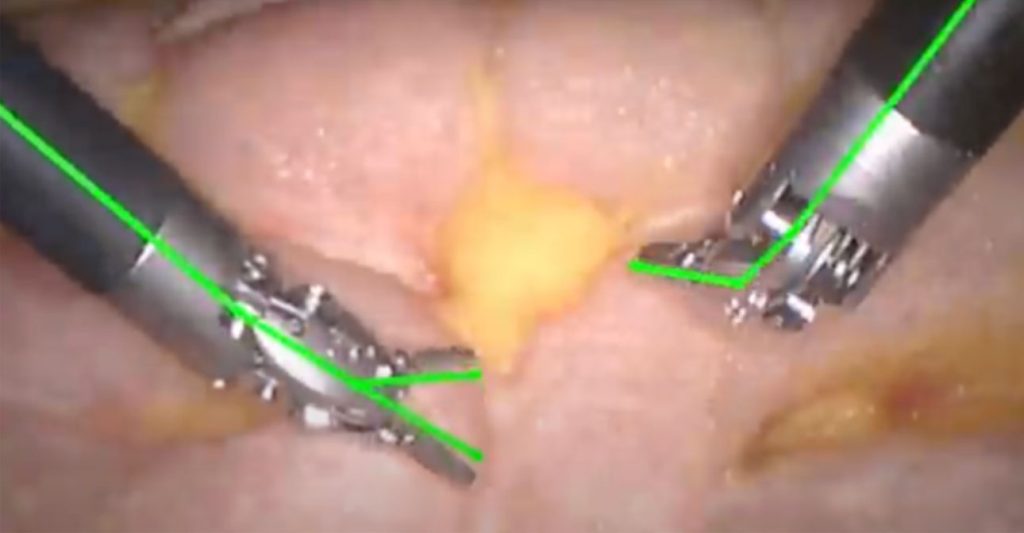

Differentiable Rendering-based Pose Estimation for Surgical Robotic Instruments

arXiv preprint arXiv:2503.05953 (2025)

Zekai Liang, Zih-Yun Chiu, Florian Richter, Michael C Yip

KineDepth: Utilizing Robot Kinematics for Online Metric Depth Estimation

arXiv preprint arXiv:2409.19490 (2025)

Soofiyan Atar, Yuheng Zhi, Florian Richter, Michael Yip

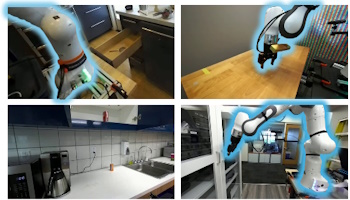

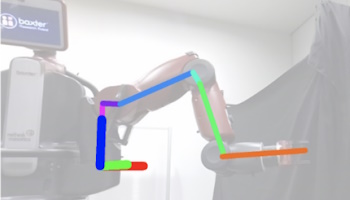

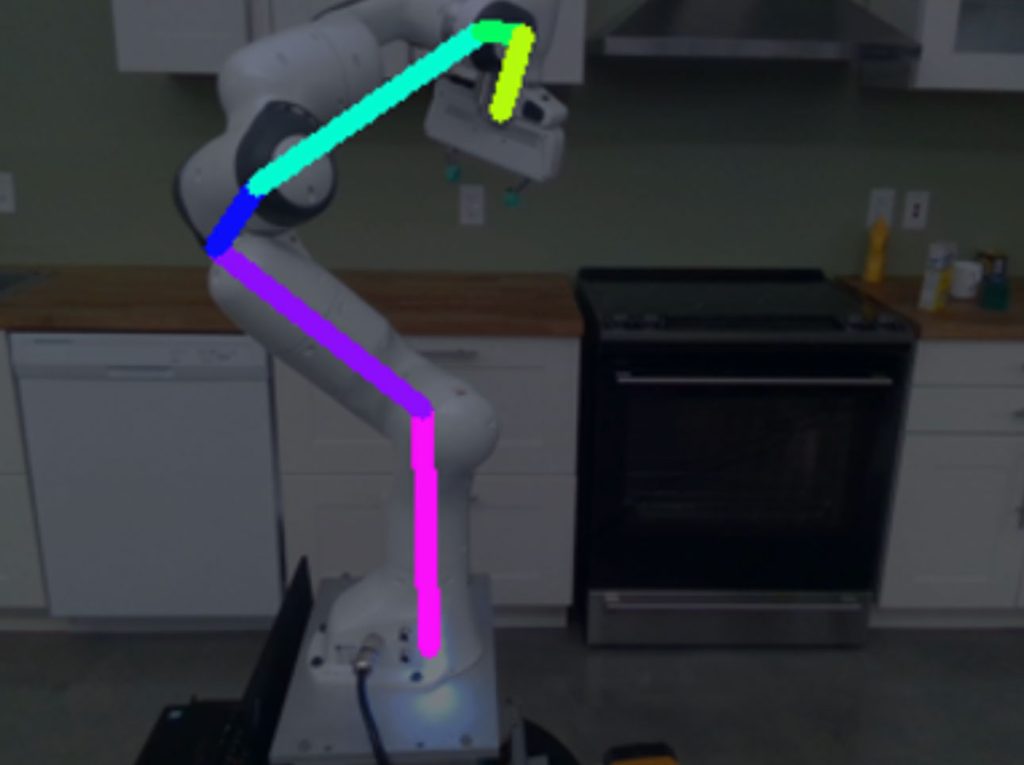

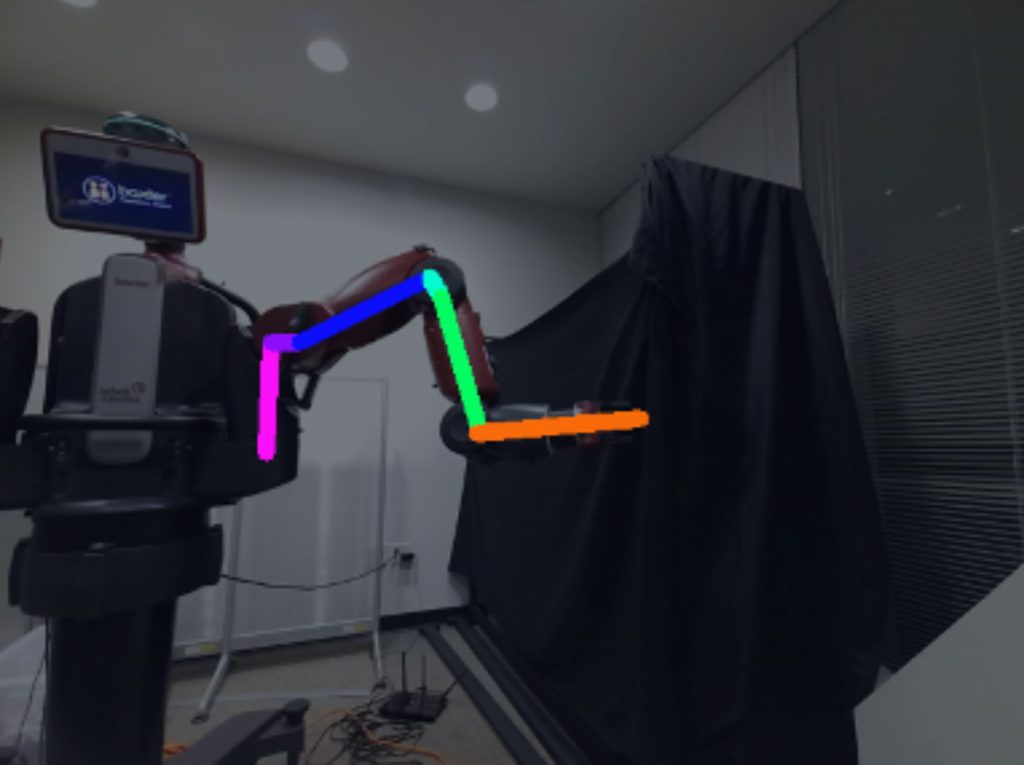

CtRNet-X: Camera-to-Robot Pose Estimation in Real-world Conditions Using a Single Camera

Proc. IEEE International Conference on Robotics and Automation (ICRA) (2025)

Jingpei Lu, Zekai Liang, Tristin Xie, Florian Richter, Shan Lin, Sainan Liu, Michael C Yip

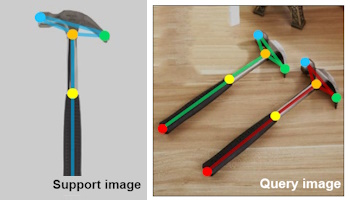

AnyOKP: One-Shot and Instance-Aware Object Keypoint Extraction with Pretrained ViT

Proc. IEEE International Conference on Robotics and Automation (ICRA) (2024)

Fangbo Qin, Taogang Hou, Shan Lin, Kaiyuan Wang, Michael C Yip, Shan Yu

Real-time constrained 6D object-pose tracking of an in-hand suture needle for minimally invasive robotic surgery

Proc. IEEE International Conference on Robotics and Automation (ICRA) (2023)

Zih-Yun Chiu, Florian Richter, Michael C Yip

Best Paper Award

Image-based pose estimation and shape reconstruction for robot manipulators and soft, continuum robots via differentiable rendering

Proc. IEEE International Conference on Robotics and Automation (ICRA) (2023)

Jingpei Lu, Fei Liu, Cedric Girerd, Michael C Yip

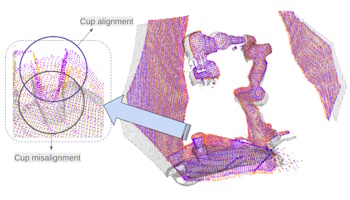

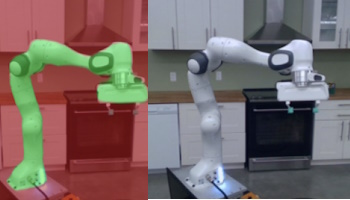

Markerless camera-to-robot pose estimation via self-supervised sim-to-real transfer

Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2023)

Jingpei Lu, Florian Richter, Michael C Yip

Pose estimation for robot manipulators via keypoint optimization and sim-to-real transfer

IEEE Robotics and Automation Letters (2022)

Jingpei Lu, Florian Richter, Michael C Yip

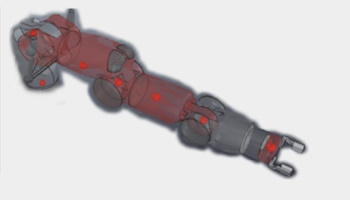

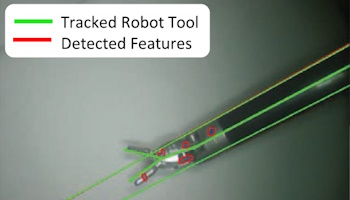

Robotic tool tracking under partially visible kinematic chain: A unified approach

IEEE Transactions on Robotics (2021)

Florian Richter, Jingpei Lu, Ryan K Orosco, Michael C Yip