Robot Learning Foundations

Robots in the wild are robots operating in unseen, complex, and challenging environments under new environmental conditions. Robot policies and controllers, and even self-sensing (i.e., estimating the robot's own current state), frequently fail when taken from a controlled environment into the real world.

This research fundamentally focuses on learning skills, either on the fly (online reinforcement learning) or by leveraging past experience (imitation and transfer learning), so that robots can respond as quickly as possible to new, unseen environments. This ranges from robots that build a model of their own motions as they move to robots that solve for controllers or policies online as more data is collected in their new environments. This research also involves transferring knowledge between simulation and reality, where reinforcement learning can guide exploration of a robot's capabilities while exploiting what it has already learned about itself and its environment.

This research topic is open-ended by nature, as robots that monitor their own behavior, explore new motions, and adapt to environmental variables have broad applications.

Students & Collaborators

- Jingpei Lu

- Zih-Yun Chiu

- Nikhil Shinde

Recent Publications

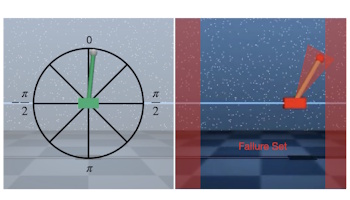

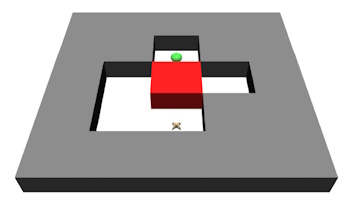

Back to Base: Towards Hands-Off Learning via Safe Resets with Reach-Avoid Safety Filters

Learning for Decision and Control Conference (L4DC) (2025)

Azra Begzadić, Nikhil Uday Shinde, Sander Tonkens, Dylan Hirsch, Kaleb Ugalde, Michael C Yip, Jorge Cortés, Sylvia Herbert

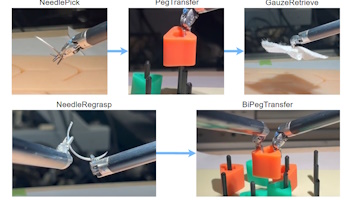

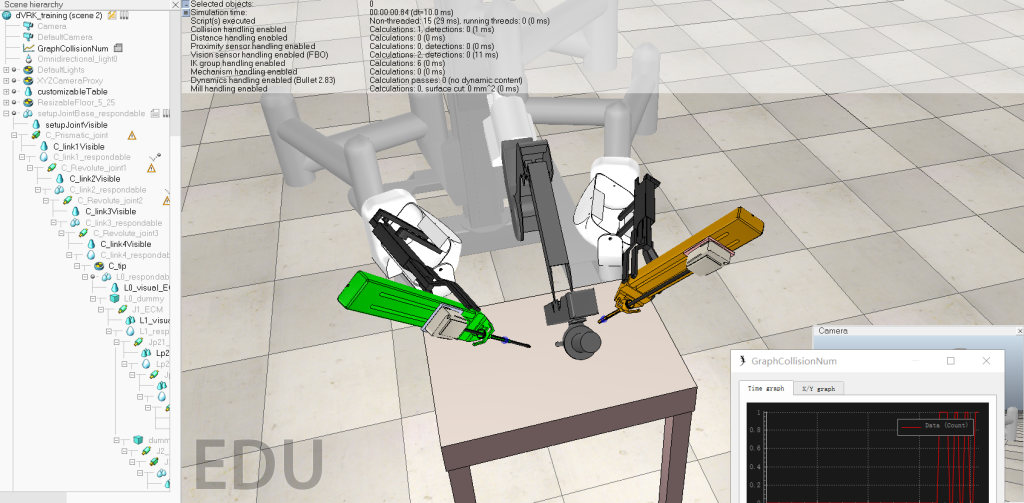

SurgIRL: Towards Life-Long Learning for Surgical Automation by Incremental Reinforcement Learning

arXiv preprint arXiv:2409.15651 (2025)

Yun-Jie Ho, Zih-Yun Chiu, Yuheng Zhi, Michael C Yip

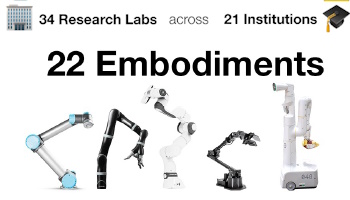

Open X-Embodiment: Robotic learning datasets and RT-X models

Proc. IEEE International Conference on Robotics and Automation (ICRA) (2024)

Open X-Embodiment Collaboration

BEST PAPER AWARD

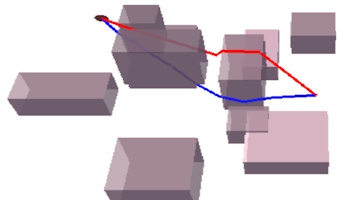

Zero-Shot Constrained Motion Planning Transformers Using Learned Sampling Dictionaries

Proc. IEEE International Conference on Robotics and Automation (ICRA) (2024)

Jacob J Johnson, Ahmed H Qureshi, Michael C Yip

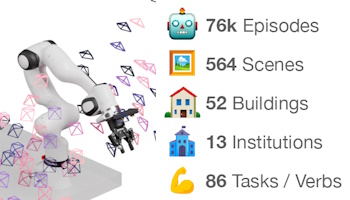

DROID: A large-scale in-the-wild robot manipulation dataset

Robotics: Science and Systems (2024)

Alexander Khazatsky, Karl Pertsch, Suraj Nair, Ashwin Balakrishna, Sudeep Dasari, Siddharth Karamcheti, Soroush Nasiriany, Mohan Kumar Srirama, Lawrence Yunliang Chen, Kirsty Ellis, Peter David Fagan, Joey Hejna, Masha Itkina, Marion Lepert, Yecheng Jason Ma, Patrick Tree Miller, Jimmy Wu, Suneel Belkhale, Shivin Dass, Huy Ha, Arhan Jain, Abraham Lee, Youngwoon Lee, Marius Memmel, Sungjae Park, Ilija Radosavovic, Kaiyuan Wang, Albert Zhan, Kevin Black, Cheng Chi, Kyle Beltran Hatch, Shan Lin, Jingpei Lu, Jean Mercat, Abdul Rehman, Pannag R Sanketi, Archit Sharma, Cody Simpson, Quan Vuong, Homer Rich Walke, Blake Wulfe, Ted Xiao, Jonathan Heewon Yang, Arefeh Yavary, Tony Z Zhao, Christopher Agia, Rohan Baijal, Mateo Guaman Castro, Daphne Chen, Qiuyu Chen, Trinity Chung, Jaimyn Drake, Ethan Paul Foster, Jensen Gao, David Antonio Herrera, Minho Heo, Kyle Hsu, Jiaheng Hu, Donovon Jackson, Charlotte Le, Yunshuang Li, Kevin Lin, Roy Lin, Zehan Ma, Abhiram Maddukuri, Suvir Mirchandani, Daniel Morton, Tony Nguyen, Abigail O'Neill, Rosario Scalise, Derick Seale, Victor Son, Stephen Tian, Emi Tran, Andrew E Wang, Yilin Wu, Annie Xie, Jingyun Yang, Patrick Yin, Yunchu Zhang, Osbert Bastani, Glen Berseth, Jeannette Bohg, Ken Goldberg, Abhinav Gupta, Abhishek Gupta, Dinesh Jayaraman, Joseph J Lim, Jitendra Malik, Roberto Martín-Martín, Subramanian Ramamoorthy, Dorsa Sadigh, Shuran Song, Jiajun Wu, Michael C Yip, Yuke Zhu, Thomas Kollar, Sergey Levine, Chelsea Finn

BEST PAPER AWARD

Flexible attention-based multi-policy fusion for efficient deep reinforcement learning

Proc. Advances in Neural Information Processing Systems (NeurIPS) (2024)

Zih-Yun Chiu, Yi-Lin Tuan, William Yang Wang, Michael Yip

Towards Non-Parametric Models for Confidence Aware Image Prediction from Low Data using Gaussian Processes

Proc. IEEE International Conference on Automation Science and Engineering (CASE) (2024)

Nikhil U Shinde, Florian Richter, Michael C Yip

Object-Centric Representations for Interactive Online Learning with Non-Parametric Methods

Proc. IEEE International Conference on Automation Science and Engineering (CASE) (2023)

Nikhil U Shinde, Jacob Johnson, Sylvia Herbert, Michael C Yip

Artificial intelligence meets medical robotics

Science (2023)

Michael Yip, Septimiu Salcudean, Ken Goldberg, Kaspar Althoefer, Arianna Menciassi, Justin D Opfermann, Axel Krieger, Krithika Swaminathan, Conor J Walsh, He Huang, I-Chieh Lee

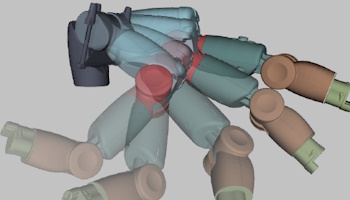

Parameter identification and motion control for articulated rigid body robots using differentiable position-based dynamics

arXiv preprint arXiv:2201.05753 (2022)

Fei Liu, Mingen Li, Jingpei Lu, Entong Su, Michael C Yip

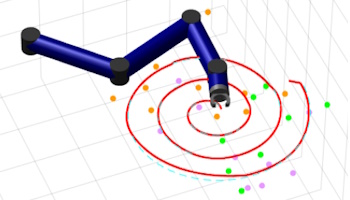

SOLAR-GP: Sparse online locally adaptive regression using Gaussian processes for Bayesian robot model learning and control

IEEE Robotics and Automation Letters (2020)

Brian Wilcox, Michael C Yip

Active continual learning for planning and navigation

Proc. ICML Workshop on Real World Experiment Design and Active Learning (2020)

Ahmed H Qureshi, Yinglong Miao, Michael C Yip

Composing ensembles of policies with deep reinforcement learning

Proc. International Conference on Learning Representations (ICLR) (2020)

Ahmed Hussain Qureshi, Jacob J Johnson, Yuzhe Qin, Byron Boots, Michael C Yip

Adversarial imitation via variational inverse reinforcement learning

Proc. International Conference on Learning Representations (ICLR) (2018)

Ahmed H Qureshi, Byron Boots, Michael C Yip